If your website relies on JavaScript to load core content, I have bad news: ChatGPT and most AI crawlers can’t see it.

That has real SEO consequences, especially as LLM-powered tools (yes, currently mostly ChatGPT, but expect to see more “AI agents” in the future) increasingly drive traffic and shape how users discover content.

Let me break down exactly how OpenAI’s bots behave, how Google’s crawling differs, and what you need to do to stay visible in both worlds.

AI Crawlers Don’t Execute JavaScript. Period.

Let’s cut straight to it: OpenAI’s GPTBot and ChatGPT’s browsing tool do not execute JavaScript.

They don’t run scripts.

They don’t wait for data-fetching.

They don’t interact with buttons or menus.

What they see is the raw initial HTML of the page—and nothing more.

What does that mean in practice?

- If your content is rendered into a `<div>` after load, they won’t see it.

- If you use fetch() or XHR to inject text, they won’t see that either.

- Client-side routing in SPAs (like client-rendered React or Vue apps) that swaps in content after page load? Completely invisible.
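To make that concrete, here is a minimal Python sketch (standard library only) of what a non-rendering crawler can read: it parses raw HTML and keeps the text that is already in the markup, ignoring anything inside `<script>`. The two pages are hypothetical, and this is a simplification, not how any specific crawler is implemented.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text that is present in the raw HTML itself.

    Text inside <script>, <style>, and <template> is skipped, because a
    crawler that doesn't execute JavaScript never sees what scripts produce.
    """

    SKIP = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def visible_text(html: str) -> str:
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical client-rendered shell: the product only exists after the script runs.
CSR_PAGE = """<html><head><title>Acme Shoes</title></head>
<body><div id="root"></div>
<script>document.getElementById("root").textContent = "Trail Runner X, $120";</script>
</body></html>"""

# Hypothetical server-rendered page: the same content is already in the HTML.
SSR_PAGE = """<html><head><title>Acme Shoes</title></head>
<body><div id="root"><h1>Trail Runner X</h1><p>$120</p></div>
<script src="/app.js"></script>
</body></html>"""

print(visible_text(CSR_PAGE))  # Acme Shoes
print(visible_text(SSR_PAGE))  # Acme Shoes Trail Runner X $120
```

Same product, same page title: only the server-rendered version exposes the product name and price to a bot that doesn’t run scripts.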

I’ve tested this firsthand. Feed ChatGPT a site that uses client-rendered React, and it’ll spit back boilerplate or nothing at all. Often, you’ll get a vague answer like, “The content may be JavaScript-based and couldn’t be retrieved.”

They’re blind to JavaScript. Simple as that.

ChatGPT’s Browsing Tool Is Just a Text Fetcher

When ChatGPT looks something up with its browsing tool, it fetches the page using a basic request—like curl or a simple HTTP client. Here’s what that process looks like:

- It retrieves the raw HTML.

- It doesn’t run JavaScript or wait for scripts.

- It doesn’t download external stylesheets or assets.

- It doesn’t click or scroll.
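A minimal sketch of that kind of text fetcher, using only Python’s standard library. It starts a throwaway local server (a hypothetical stand-in for your site) that serves a client-rendered shell, then makes one plain GET request, roughly what curl does. It is not OpenAI’s actual fetcher; it just shows that a single request returns the empty shell, and `/app.js` is never even requested.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical client-rendered page: an empty root plus a script reference.
SHELL = b'<html><body><div id="root"></div><script src="/app.js"></script></body></html>'


class ShellHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.server.paths_seen.append(self.path)  # record every request we receive
        if self.path != "/":
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(SHELL)))
        self.end_headers()
        self.wfile.write(SHELL)

    def log_message(self, *args):  # keep the demo output quiet
        pass


def fetch_raw_html(url: str, user_agent: str = "text-fetcher-demo/0.1") -> str:
    """One plain GET request. No JavaScript, no stylesheets, no clicks or scrolling."""
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def run_demo() -> tuple[str, list[str]]:
    server = HTTPServer(("127.0.0.1", 0), ShellHandler)  # port 0: any free port
    server.paths_seen = []
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        html = fetch_raw_html(f"http://127.0.0.1:{server.server_port}/")
    finally:
        server.shutdown()
        server.server_close()
    return html, server.paths_seen


if __name__ == "__main__":
    html, paths = run_demo()
    print(html)   # the empty <div id="root"></div> shell, script tag untouched
    print(paths)  # ['/']: /app.js was never requested
```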

This is crucial to understand: if your page requires a browser to render correctly, ChatGPT won’t see it. It’s not a browser.

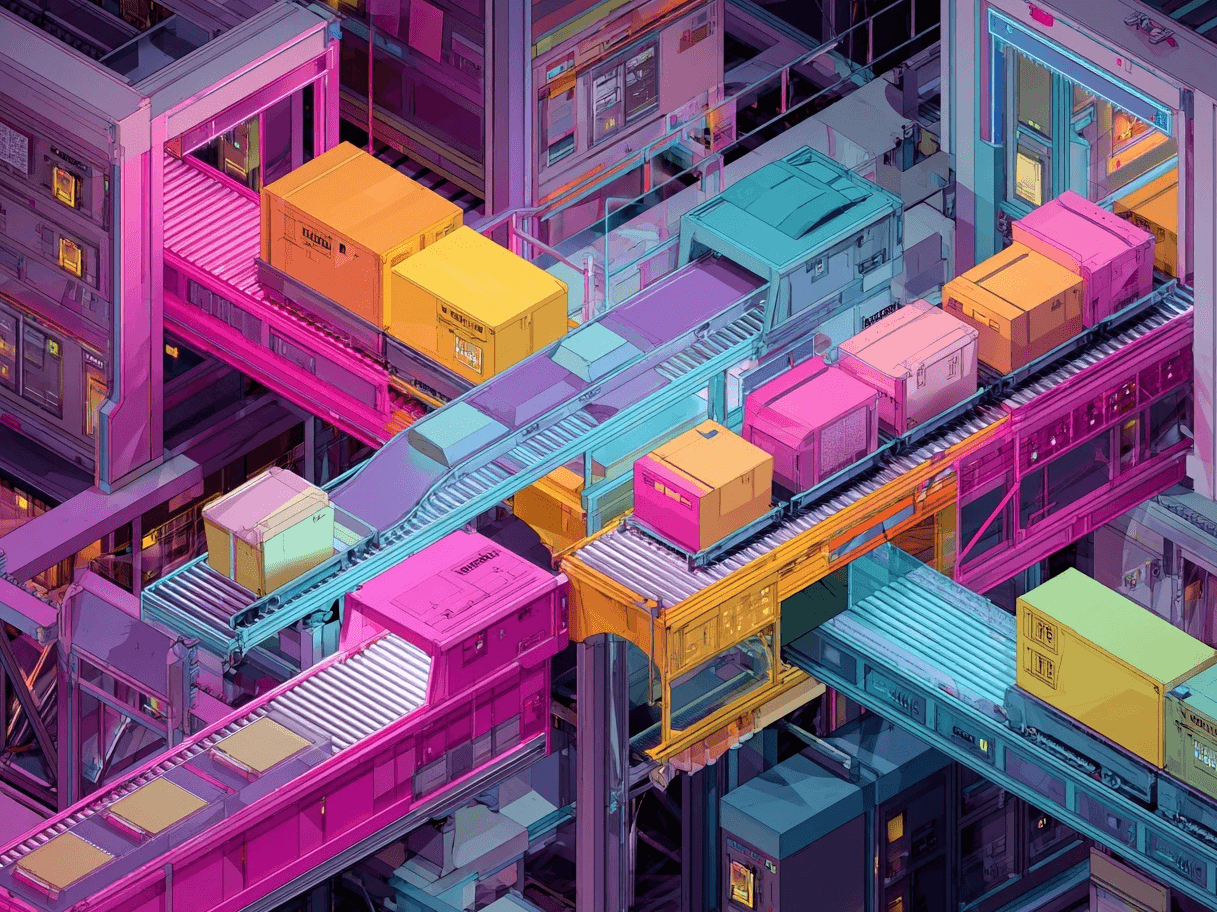

Here is a small example of what a site like Zalando looks like with JavaScript disabled.

It might be an extreme case, but it’s clear that this is NOT what you want AI answers about your business or products to be based on.

GPTBot Doesn’t Run JavaScript Either

OpenAI’s GPTBot, used to crawl and collect data for training models like GPT-4 and the upcoming GPT-5, has the same limitation.

It fetches static files: HTML, CSS, JS, JSON.

But it doesn’t run them.

So even though GPTBot might fetch your JavaScript files, it won’t execute them. Any content that’s dynamically injected using client-side rendering won’t make it into the training data.

In other words: JavaScript-only content won’t help you rank in ChatGPT answers.

GPTBot does respect robots.txt, so if you’ve opted out, your site won’t be included in model training. That’s a valid move—but you need to know what you’re trading away.
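If you want to check how a robots.txt rule applies to GPTBot before you deploy it, Python’s built-in `urllib.robotparser` can evaluate it locally. `GPTBot` is the user-agent token OpenAI documents for this crawler; the robots.txt content and the URL below are just examples.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: opt GPTBot out entirely, leave every other crawler alone.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://yourdomain.com/products/trail-runner-x"
for agent in ("GPTBot", "Googlebot"):
    print(agent, parser.can_fetch(agent, url))
# GPTBot False
# Googlebot True
```

This only tells you what the rules say; whether a crawler honors them is up to the crawler.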

But Google Does Render JavaScript

Google is the exception.

Google has been around for a long time, and crawling is at the core of what it does.

It’s difficult to scrape the web and its dynamic content if you can’t render JavaScript.

I remember back in the day, a key SEO rule was to never make important content depend on JavaScript to display it.

Googlebot’s JavaScript processing operates in a two-wave system designed for efficiency and scale.

Here’s how it works:

Wave 1: Initial Crawl

- Googlebot fetches the raw HTML and all static resources—CSS, images, and JavaScript files—without executing any scripts.

- Only content present in this raw HTML is immediately available for indexing.

- Dynamic elements generated by JavaScript are ignored at this stage.

Wave 2: Rendering and Indexing

- Pages are queued for rendering after the initial crawl.

- Googlebot uses a headless Chromium browser to execute JavaScript, process client-side code, and fetch API data.

- The fully rendered DOM is parsed, and any new content or links are indexed.

This two-step approach balances speed and thoroughness. However, dynamic content can face delays before appearing in search results, especially on JavaScript-heavy or resource-intensive sites.

Critical information that relies solely on client-side rendering may not be discovered or ranked as quickly as static HTML.
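To illustrate the delay, here’s a toy Python model of the two-wave idea. It is not how Google actually implements indexing: pages are reduced to plain text, and `fake_render` is a hypothetical stand-in for the headless browser. The point is that JavaScript-injected content only reaches the index once the render queue is processed, and a crawler that never runs wave 2 never gets it.

```python
from collections import deque
from typing import Callable


class TwoWaveIndexer:
    """Toy model of two-wave indexing (not Google's real pipeline).

    Pages are simplified to plain text; `render` stands in for a headless
    browser that runs the page's JavaScript and returns the resulting text.
    """

    def __init__(self, render: Callable[[str], str]) -> None:
        self.render = render
        self.index: dict[str, set[str]] = {}
        self.render_queue: deque = deque()

    def _add(self, url: str, text: str) -> None:
        self.index.setdefault(url, set()).update(text.split())

    def crawl(self, url: str, raw_text: str) -> None:
        """Wave 1: index what is in the raw HTML now, queue the page for rendering."""
        self._add(url, raw_text)
        self.render_queue.append((url, raw_text))

    def process_render_queue(self) -> None:
        """Wave 2, some time later: render each queued page and index new text."""
        while self.render_queue:
            url, raw_text = self.render_queue.popleft()
            self._add(url, self.render(raw_text))


def fake_render(raw_text: str) -> str:
    # Hypothetical: pretend the page's JavaScript injects a product name.
    return raw_text + " TrailRunnerX"


indexer = TwoWaveIndexer(fake_render)
indexer.crawl("/running-shoes", "Running Shoes")
print("TrailRunnerX" in indexer.index["/running-shoes"])  # False: wave 1 only
indexer.process_render_queue()
print("TrailRunnerX" in indexer.index["/running-shoes"])  # True: found in wave 2
```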

Eventually, though, Google sees your site the way a real browser would: fully rendered, with JavaScript content in place.

Google has a whole article outlining how it works.

Why does this matter?

Because it creates an SEO split:

If your SEO strategy only works with Google, that might be fine - for now.

But if you want your content discoverable across the growing ecosystem of AI tools, you have to optimize for static HTML.

What Content AI Crawlers Do See

To be visible to ChatGPT and similar bots:

- Render key content server-side (SSR or static generation).

- Avoid relying on JavaScript to insert titles, body text, pricing, or CTAs.

- Structure your HTML semantically, with proper use of headings, paragraphs, lists, and similar elements.

- Expose public API endpoints, if relevant—and link to them directly if you want ChatGPT to see the data.

- Avoid modals, login walls, or cookie banners that block crawlers from reaching content.

Even embedding structured JSON data (e.g. a hydration blob) into the HTML can sometimes help—if it’s present on first load and readable without executing JavaScript.
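Here’s a minimal Python sketch of why that can work: a parser that reads JSON out of `<script type="application/ld+json">` and `<script type="application/json">` blocks without executing anything. The page, the `initial-state` id, and the product values are hypothetical, and whether a given AI crawler actually uses such data is not guaranteed.

```python
import json
from html.parser import HTMLParser

JSON_SCRIPT_TYPES = {"application/ld+json", "application/json"}


class EmbeddedJSONExtractor(HTMLParser):
    """Reads JSON embedded in <script type="application/ld+json"> or
    <script type="application/json"> blocks. Nothing is executed: the JSON
    is parsed as data, straight from the raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self._in_json = False
        self._buf: list[str] = []
        self.blobs: list = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") in JSON_SCRIPT_TYPES:
            self._in_json = True
            self._buf = []

    def handle_data(self, data):
        if self._in_json:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json:
            self._in_json = False
            try:
                self.blobs.append(json.loads("".join(self._buf)))
            except json.JSONDecodeError:
                pass  # skip blocks that aren't valid JSON


def extract_json_blobs(html: str) -> list:
    parser = EmbeddedJSONExtractor()
    parser.feed(html)
    parser.close()
    return parser.blobs


# Hypothetical page: an empty app root, but product data is already in the HTML.
PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Trail Runner X",
 "offers": {"@type": "Offer", "price": "120.00", "priceCurrency": "USD"}}
</script>
<script id="initial-state" type="application/json">{"products": [{"sku": "TRX-1", "stock": 4}]}</script>
</head><body><div id="root"></div></body></html>"""

blobs = extract_json_blobs(PAGE)
print(blobs[0]["name"])                # Trail Runner X
print(blobs[1]["products"][0]["sku"])  # TRX-1
```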

Real-World Example: Why Your React App Might Be Invisible

A startup I consulted had a beautiful marketing site built in client-side React. All the content was populated via useEffect() once the app loaded.

When I tested it via curl, the HTML was a skeleton. When I asked ChatGPT to summarize the page, it returned nothing.

We fixed this by switching to Next.js with server-side rendering.

We pre-rendered core sections like headlines, feature lists, and product details. Once deployed, ChatGPT could crawl and summarize the content. A few weeks later, snippets started showing up in LLM answers.

How to Make Your Site AI-Friendly (Beyond Google)

If you want to be seen by ChatGPT, Claude, Perplexity, and future AI search tools, here’s what to do:

1. Use Server-Side Rendering (SSR)

Render your content on the server so it appears in the initial HTML response. Frameworks like Next.js, SvelteKit, and Nuxt make this doable.
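Frameworks handle this for you, but the core idea fits in a few lines. Below is a framework-free Python sketch (standard library only) of server-side rendering: the server builds the complete product list into the HTML before responding. The product data and the `/running-shoes` route are hypothetical.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical catalog data; in a real app this comes from your database or API.
PRODUCTS = [
    {"name": "Trail Runner X", "price": "$120"},
    {"name": "Road Racer 2", "price": "$95"},
]


def render_category(title: str, products: list) -> str:
    """Build the complete page on the server, so the content is in the first response."""
    items = "\n".join(
        f'<li class="product"><h2>{html.escape(p["name"])}</h2>'
        f'<p class="price">{html.escape(p["price"])}</p></li>'
        for p in products
    )
    return (
        f"<!doctype html><html><head><title>{html.escape(title)}</title></head>"
        f'<body><h1>{html.escape(title)}</h1>\n<ul id="products">\n{items}\n</ul>\n'
        # JavaScript can still add interactivity, but no content depends on it.
        '<script src="/app.js" defer></script></body></html>'
    )


class SSRHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/running-shoes":
            self.send_error(404)
            return
        body = render_category("Running Shoes", PRODUCTS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


# To try it locally (blocks until interrupted), then curl http://127.0.0.1:8000/running-shoes
# HTTPServer(("127.0.0.1", 8000), SSRHandler).serve_forever()
```

Next.js, SvelteKit, and Nuxt do the same thing at their core, plus hydration so the page becomes interactive once the JavaScript loads.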

2. Generate Static Pages

If your content is stable, use static site generation (SSG). Tools like Astro, Hugo, and Gatsby are built for this.
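The same idea, moved to build time: a small Python sketch of static generation that writes one finished HTML file per page. The `PAGES` content is hypothetical; tools like Astro or Hugo add templating, Markdown, and asset handling on top of this basic step.

```python
import html
import tempfile
from pathlib import Path

# Hypothetical content; a real SSG would read Markdown files or a CMS export.
PAGES = {
    "index": ("Acme Shoes", "Running shoes for every surface."),
    "running-shoes": ("Running Shoes", "Trail Runner X and Road Racer 2, in stock now."),
}


def build_site(out_dir: Path) -> list:
    """Write one finished HTML file per page at build time (no server rendering later)."""
    written = []
    for slug, (title, body) in PAGES.items():
        # "index" -> out/index.html, "running-shoes" -> out/running-shoes/index.html
        path = out_dir / "index.html" if slug == "index" else out_dir / slug / "index.html"
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(
            f"<!doctype html><html><head><title>{html.escape(title)}</title></head>"
            f"<body><h1>{html.escape(title)}</h1><p>{html.escape(body)}</p></body></html>",
            encoding="utf-8",
        )
        written.append(path)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for path in build_site(Path(tmp)):
            print(path.relative_to(tmp).as_posix())
    # index.html
    # running-shoes/index.html
```

Any static host can serve the output folder, and every page is complete HTML from the first byte.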

3. Don’t Rely on SPA-Only Routes

If your page routing is handled purely client-side, AI bots can’t follow it. Ensure that every major page exists as a real URL returning full HTML.
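Bots discover pages by following real links in the raw HTML. This Python sketch (standard library only) collects only `<a href>` links, which roughly mirrors what a non-rendering crawler can follow. The navigation markup and the `router.push` calls are hypothetical examples of client-side-only routing.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects crawlable links: <a> elements with a real href."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        # "#" and "javascript:" hrefs don't point to a real page a bot can fetch.
        if tag == "a" and href and not href.startswith(("#", "javascript:")):
            self.links.append(urljoin(self.base_url, href))


def crawlable_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


NAV = """<nav>
  <a href="/running-shoes">Running Shoes</a>
  <button onclick="router.push('/trail-shoes')">Trail Shoes</button>
  <a href="#" onclick="router.push('/sale')">Sale</a>
</nav>"""

print(crawlable_links(NAV, "https://yourdomain.com/"))
# ['https://yourdomain.com/running-shoes']
```

Only the first link is discoverable; the trail-shoes and sale pages exist solely inside JavaScript.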

4. Test Your Site Like an AI Bot

Use this command to simulate what GPTBot sees:

`curl -s https://yourdomain.com | less`

Or disable JavaScript in your browser and load the page. If the main content isn’t there, AI crawlers won’t see it either.
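If you’d rather automate that check, here’s a small Python script (standard library only) that fetches the raw HTML without running JavaScript and reports which key phrases are missing. The script name, user-agent string, and phrases are placeholders; pick phrases that must be visible, like product names and prices.

```python
import sys
import urllib.request


def missing_phrases(raw_html: str, must_contain: list[str]) -> list[str]:
    """Phrases that are NOT present in the raw HTML (case-insensitive substring match)."""
    lowered = raw_html.lower()
    return [p for p in must_contain if p.lower() not in lowered]


def fetch(url: str) -> str:
    # Plain GET, no JavaScript: roughly what `curl -s` returns.
    req = urllib.request.Request(url, headers={"User-Agent": "visibility-check/0.1"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__" and len(sys.argv) > 2:
    # Usage: python check_visibility.py https://yourdomain.com 'Trail Runner X' '$120'
    missing = missing_phrases(fetch(sys.argv[1]), sys.argv[2:])
    for phrase in missing:
        print(f"NOT in initial HTML: {phrase}")
    sys.exit(1 if missing else 0)
```

It’s a plain substring check, so a phrase that only appears inside a `<script>` block would still count as found; for a stricter test, strip scripts and compare only visible text.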

5. Use Fallbacks

For crucial bits of content, add static fallbacks, for example in a `<noscript>` block. Browsers with JavaScript enabled won’t render them, but AI bots reading the raw HTML can see them.

6. Expose Your API, If It Makes Sense

If your site uses an API to load structured content (like product data), make sure it’s publicly reachable. You can even link to those endpoints in your content.
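A minimal, standard-library Python sketch of a public, read-only JSON endpoint, plus a plain `<a href>` on an HTML page pointing at it so a bot reading the raw HTML can discover it. The `/api/products.json` path and the catalog data are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical catalog data; the /api/products.json path is also just an example.
PRODUCTS = [{"sku": "TRX-1", "name": "Trail Runner X", "price": 120.0, "in_stock": True}]

# The HTML page links to the endpoint with a plain <a href>, so it is discoverable.
INDEX = b'<html><body><h1>Catalog</h1><a href="/api/products.json">Product data (JSON)</a></body></html>'


class CatalogHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/":
            body, ctype = INDEX, "text/html; charset=utf-8"
        elif self.path == "/api/products.json":
            body, ctype = json.dumps({"products": PRODUCTS}).encode("utf-8"), "application/json"
        else:
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", ctype)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging
        pass


def start_server() -> HTTPServer:
    server = HTTPServer(("127.0.0.1", 0), CatalogHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_server()
    base = f"http://127.0.0.1:{server.server_port}"
    with urllib.request.urlopen(base + "/api/products.json") as resp:
        print(json.load(resp)["products"][0]["name"])  # Trail Runner X
    server.shutdown()
    server.server_close()
```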

Why ChatGPT Can’t See Your Product Listings Loaded by JavaScript

Let’s say you run an ecommerce store. Your product grid, prices, and stock levels are all loaded dynamically, maybe with React, Vue, or just some vanilla JavaScript that fetches data from your backend API. Here’s the catch: ChatGPT and most AI crawlers are completely blind to anything loaded this way.

Here’s what’s actually happening:

- When ChatGPT (or GPTBot) visits your site, it grabs the raw HTML that’s sent on the first request.

- It doesn’t wait for your scripts to run, doesn’t fetch your product data, and doesn’t see anything injected after the page loads.

- If your product listings, prices, or “Add to Cart” buttons are rendered by JavaScript, ChatGPT sees a blank slate - maybe just a header and footer.

Example:

You have a category page for “Running Shoes.” The initial HTML just has a <div id="products"></div>. JavaScript fetches the product list from your API and fills in the grid. ChatGPT? It only sees the empty <div>. No shoes, no prices, no descriptions.

What does this mean for ecommerce?

- Your latest deals, new arrivals, or even your entire catalog might be invisible to AI-powered shopping assistants and answer engines.

- If you want your products to show up in ChatGPT’s answers, or be summarized by future AI shopping tools, that data needs to be present in the HTML from the start—not just loaded after the fact.

If your ecommerce content lives behind JavaScript, you’re invisible to the next wave of AI-driven discovery. If you want your products, prices, and offers to be found by ChatGPT and similar tools, make sure they’re in the initial HTML - otherwise, you’re leaving money on the table.

Optimize for Both Worlds

Google is way ahead in AI crawling. It can render JavaScript, wait for content to load, and feed the result to Gemini. That’s why AI Overviews can summarize your JavaScript-rich site.

But OpenAI and everyone else? Still operating like it’s 2010. If your content isn’t in the HTML, it might as well not exist to them.

So here’s the strategy:

- Use SSR or static HTML for critical content.

- Test what crawlers see.

- Structure your site for both Google and the rest of the AI ecosystem.

Because the next generation of discovery isn’t just about search engines—it’s about LLMs. And they read HTML, not JavaScript.

Want to try the #1 AI Toolkit for SEO teams?

Our AI SEO assistants help write and optimize everything, from descriptions and articles to product feeds, so they appeal to both customers and search engine algorithms. Try it now with a free trial.